8.6 The Regression Anatomy Formula

We make the claim in live session that we can re-represent a coefficient that we’re interested in as a function of all the other variable in a regression. That is, suppose that we were interested, initially, in estimating the model:

\[ Y = \hat\beta_{0} + \hat\beta_{1} X_{1} + \hat\beta_{2} X_{2} + \hat\beta_{3}X_{3} + e \] that we can produce an estimate for \(\hat\beta_{1}\) by fitting this auxiliary regression,

\[ X_{1} = \hat\delta_{0} + \hat\delta_2X_2 + \hat\delta_3X_3 + r_{1} \]

And then using the residuals, noted as \(r_1\) above, in a second auxiliary regression,

\[ Y = \gamma_0 + \gamma_1 r_1 \]

The claim that we make in the live session is that there is a guarantee that \(\beta_1 = \gamma_1\). Here, we are first going to show that this is true, and then we’re going to reason about what this means, and why this feature is interesting (or at least useful) when we are estimating a regression.

Suppose that the population model is the following:

\[ X_{1} = \begin{cases} \frac{1}{10}, & 0 \leq x \leq 10, \\ 0, & otherwise \end{cases}, \\ X_{2} = \begin{cases} \frac{1}{10}, & 0 \leq x \leq 10, \\ 0, & otherwise \end{cases}, \\ X_{3} = \begin{cases} \frac{1}{10}, & 0 \leq x \leq 10, \\ 0, & otherwise \end{cases} \] And, furthermore suppose that \(Y = g(X_{1}, X_{2}, X_{3}\), specifically, that:

\[ Y = -3 + (1\cdot X_1) + (2\cdot X_2) + (3\cdot X_3) \] Then, because we know the population model, we can produce a single sample from it using the following code:

Code

## x1 x2 x3 y

## 1 2.574453 2.0164855 5.864455 22.22699

## 2 3.533997 0.6302335 6.119277 19.27601

## 3 1.730922 1.9090746 3.126370 10.88401

## 4 5.977906 1.6324287 7.661009 29.98035

## 5 2.935329 3.9556559 8.179117 32.75561

## 6 4.530804 8.9397239 3.170691 25.86899Notice that when we made this data, we included a set of random noise at the end. The idea here is that there are other “things” in this universe that also affect \(Y\), but that we don’t have access to them. By assumption, what we have measured in this world, \(X_1, X_2, X_3\) are uncorrelated with these other features.

8.6.1 Estimate an OLS Regression

Let’s begin by producing an estimate of the OLS regression of Y on these X variables. Notice the way that we’re talking about this:

We are going to regress Y on \(X_{1}\), \(X_{2}\), and \(X_{3}\).

## (Intercept) x1 x2 x3

## -3.081193 1.010980 2.014148 2.9864168.6.2 Regression Anatomy and Fritch Waugh Lovell

The claim is that we can produce an estimate of \(\hat{\beta}_1\) using an auxiliary set of regression estimates, and then using the regression from that auxiliary regression.

If we look into the structure of model_aux we can see that there are a ton of pieces in here.

## List of 12

## $ coefficients : Named num [1:3] 4.9843 -0.1222 0.0528

## ..- attr(*, "names")= chr [1:3] "(Intercept)" "x2" "x3"

## $ residuals : Named num [1:100] -2.473 -1.696 -3.185 0.788 -1.998 ...

## ..- attr(*, "names")= chr [1:100] "1" "2" "3" "4" ...

## $ effects : Named num [1:100] -46.738 -3.391 1.375 0.934 -2.047 ...

## ..- attr(*, "names")= chr [1:100] "(Intercept)" "x2" "x3" "" ...

## $ rank : int 3

## $ fitted.values: Named num [1:100] 5.05 5.23 4.92 5.19 4.93 ...

## ..- attr(*, "names")= chr [1:100] "1" "2" "3" "4" ...

## $ assign : int [1:3] 0 1 2

## $ qr :List of 5

## ..$ qr : num [1:100, 1:3] -10 0.1 0.1 0.1 0.1 0.1 0.1 0.1 0.1 0.1 ...

## .. ..- attr(*, "dimnames")=List of 2

## .. .. ..$ : chr [1:100] "1" "2" "3" "4" ...

## .. .. ..$ : chr [1:3] "(Intercept)" "x2" "x3"

## .. ..- attr(*, "assign")= int [1:3] 0 1 2

## ..$ qraux: num [1:3] 1.1 1.15 1.09

## ..$ pivot: int [1:3] 1 2 3

## ..$ tol : num 1e-07

## ..$ rank : int 3

## ..- attr(*, "class")= chr "qr"

## $ df.residual : int 97

## $ xlevels : Named list()

## $ call : language lm(formula = x1 ~ x2 + x3, data = d)

## $ terms :Classes 'terms', 'formula' language x1 ~ x2 + x3

## .. ..- attr(*, "variables")= language list(x1, x2, x3)

## .. ..- attr(*, "factors")= int [1:3, 1:2] 0 1 0 0 0 1

## .. .. ..- attr(*, "dimnames")=List of 2

## .. .. .. ..$ : chr [1:3] "x1" "x2" "x3"

## .. .. .. ..$ : chr [1:2] "x2" "x3"

## .. ..- attr(*, "term.labels")= chr [1:2] "x2" "x3"

## .. ..- attr(*, "order")= int [1:2] 1 1

## .. ..- attr(*, "intercept")= int 1

## .. ..- attr(*, "response")= int 1

## .. ..- attr(*, ".Environment")=<environment: R_GlobalEnv>

## .. ..- attr(*, "predvars")= language list(x1, x2, x3)

## .. ..- attr(*, "dataClasses")= Named chr [1:3] "numeric" "numeric" "numeric"

## .. .. ..- attr(*, "names")= chr [1:3] "x1" "x2" "x3"

## $ model :'data.frame': 100 obs. of 3 variables:

## ..$ x1: num [1:100] 2.57 3.53 1.73 5.98 2.94 ...

## ..$ x2: num [1:100] 2.02 0.63 1.91 1.63 3.96 ...

## ..$ x3: num [1:100] 5.86 6.12 3.13 7.66 8.18 ...

## ..- attr(*, "terms")=Classes 'terms', 'formula' language x1 ~ x2 + x3

## .. .. ..- attr(*, "variables")= language list(x1, x2, x3)

## .. .. ..- attr(*, "factors")= int [1:3, 1:2] 0 1 0 0 0 1

## .. .. .. ..- attr(*, "dimnames")=List of 2

## .. .. .. .. ..$ : chr [1:3] "x1" "x2" "x3"

## .. .. .. .. ..$ : chr [1:2] "x2" "x3"

## .. .. ..- attr(*, "term.labels")= chr [1:2] "x2" "x3"

## .. .. ..- attr(*, "order")= int [1:2] 1 1

## .. .. ..- attr(*, "intercept")= int 1

## .. .. ..- attr(*, "response")= int 1

## .. .. ..- attr(*, ".Environment")=<environment: R_GlobalEnv>

## .. .. ..- attr(*, "predvars")= language list(x1, x2, x3)

## .. .. ..- attr(*, "dataClasses")= Named chr [1:3] "numeric" "numeric" "numeric"

## .. .. .. ..- attr(*, "names")= chr [1:3] "x1" "x2" "x3"

## - attr(*, "class")= chr "lm"To evaluate our claim, we need to find the residuals from this regression. As a knowledge check, what is it that we mean when we say, “residual” in this sense?

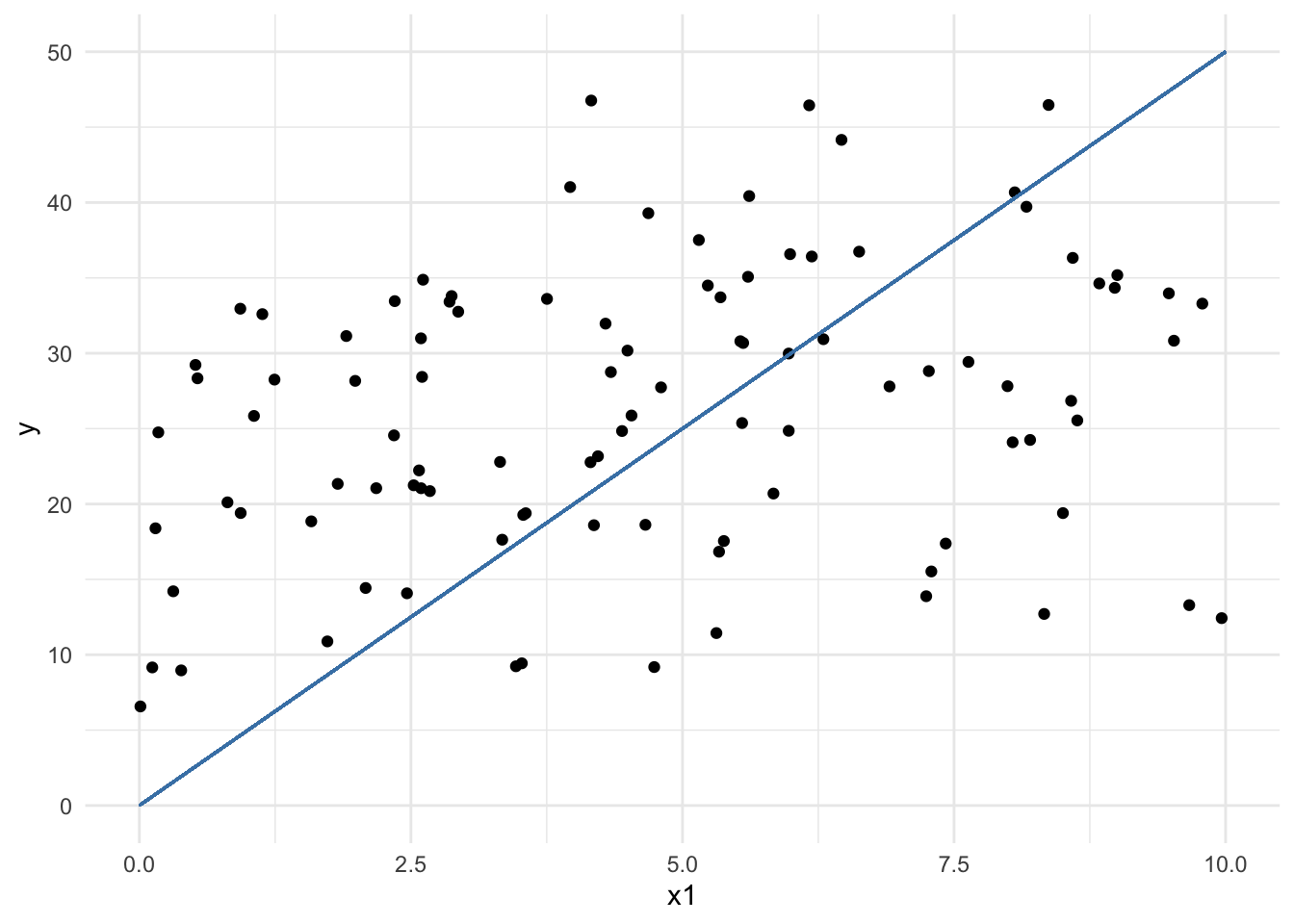

To make talking about these easier, here is a plot that might be useful.

Code

In order to access these residuals, we can “augment” the dataframe that we used in the model, using the broom::augment function call.

Because the \(Y\) variable wasn’t included in the regression for model_aux we have to bring of over from the main dataset, which is a little bit… um, hacky. Forgive these sins.

## # A tibble: 100 × 10

## x1 x2 x3 .fitted .resid .hat .sigma .cooksd .std.resid y

## <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 2.57 2.02 5.86 5.05 -2.47 0.0208 2.76 0.00582 -0.906 22.2

## 2 3.53 0.630 6.12 5.23 -1.70 0.0344 2.77 0.00466 -0.626 19.3

## 3 1.73 1.91 3.13 4.92 -3.19 0.0286 2.75 0.0135 -1.17 10.9

## 4 5.98 1.63 7.66 5.19 0.788 0.0312 2.77 0.000906 0.290 30.0

## 5 2.94 3.96 8.18 4.93 -2.00 0.0246 2.77 0.00451 -0.733 32.8

## 6 4.53 8.94 3.17 4.06 0.471 0.0383 2.77 0.000402 0.174 25.9

## 7 0.811 8.74 2.40 4.04 -3.23 0.0401 2.75 0.0199 -1.20 20.1

## 8 0.517 8.67 4.13 4.14 -3.63 0.0326 2.75 0.0200 -1.34 29.2

## 9 4.34 1.34 8.09 5.25 -0.908 0.0369 2.77 0.00143 -0.335 28.8

## 10 8.17 7.79 6.39 4.37 3.80 0.0276 2.75 0.0184 1.40 39.7

## # ℹ 90 more rowsFinally, with this augmented data that has information from the model, we can estimate the model that includes only the residuals as predictors of \(Y\).

## (Intercept) .resid

## 26.21733 1.01098Our claim was that the coefficients from model_main and model_two should be the same.

Code

## Test passed 😀But, why is this an interesting, or at least useful, feature to appreciate?

This is actually a really famous, relatively recently “rediscovered” proof. If we have a number of variables, one called an outcome and the rest called features, then we can estimate the relationship between the outcome and one feature in the following way:

- Estimate the relationship between all the other features and the one that we’re examining; save the information about the feature we’re examining which cannot be explained by the other features in some vector.

- Regress the outcome on this leftover information.

Slightly more of what is happening? In the first model, the leftover information is orthogonal to the information posessed in the regression features. In the second model, we can use this orthagonal information to estimate the effect of one variable.